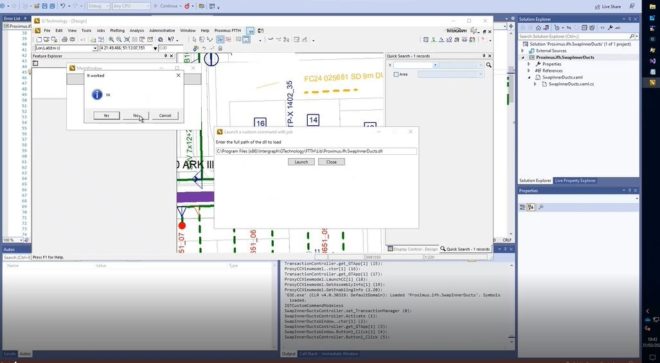

Shortening CustomCommand development time by using a proxy

One of the most time-consuming processes when developing with Hexagon G/Technology is creating custom commands. The usual development approach consists of the following iterative process: During testing,…

AdHoc Queries

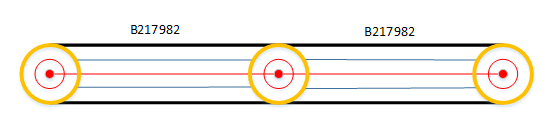

G/Technology provides functionality to run dynamic queries, the so-called 'Ad-Hoc queries'. A large telecom provider in the Netherlands is moving fiber cables from CRAMER to…

Debugging G/Technology 10.2 with Visual Studio

In an earlier post, Stephan mentioned that it was not possible to debug G/Technology 10.2 in "edit and continue" mode. You could only attach Visual Studio to the G3E.exe…

Debugging Netviewer serverside pages

If you need to build server-side pages in Netviewer, chances are that you want to debug them. The first thing to do is to change the…

Validating a ConnectionConfigurationMap.ccm file: ccmReader

When setting up a G/Technology environment, one of the most tedious tasks is always getting your ConnectionConfigurationMap.ccm right. One way to see which ddc-file is causing you trouble…